Llama API for Developers

At RayMish Technology Solutions, we follow the fast-moving AI landscape closely and look for the best tools for our clients' mobile applications, web platforms, and AI-driven products. The newly announced Llama API is a significant step forward: it aims to simplify AI development while giving developers more flexibility and capability. Our commitment remains to provide businesses with state-of-the-art solutions.

LlamaCon 2025 prompted us to think about what the announcements mean for developers. Llama has already had a substantial impact on the open-source community, with more than one billion downloads. Teams across industries use Llama to innovate, improve operations, and tackle complex problems. The API launch lowers the barrier to building AI applications and makes Llama more accessible.

What's New: Llama API Unveiled

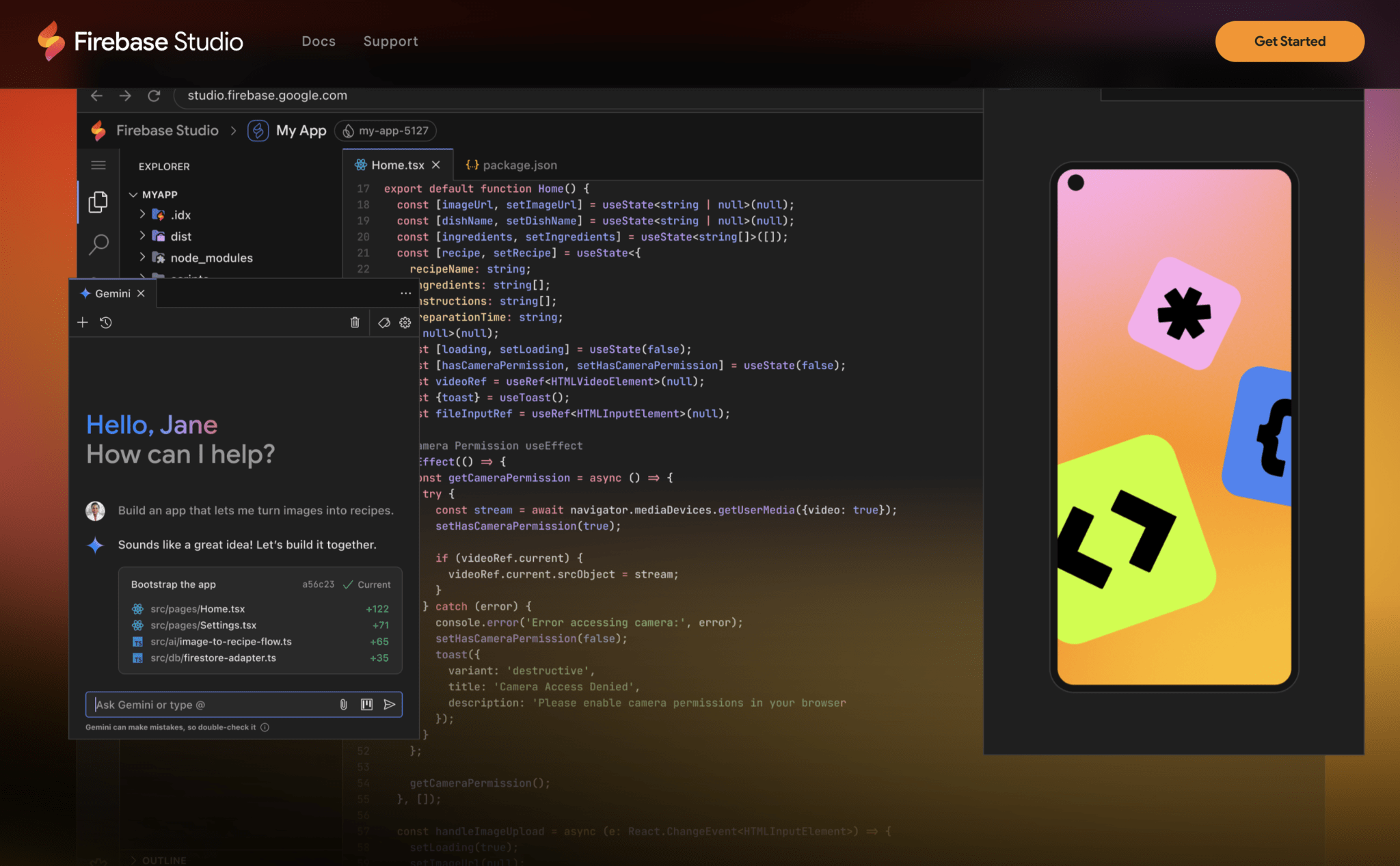

The headline announcement is the Llama API, launched in limited preview. The platform combines the convenience of commercial model APIs with the flexibility of open source. At RayMish, we prioritize giving clients control over their projects. The API makes it easier to build applications on Llama models while keeping full control over your models and their weights, without being locked into a proprietary system.

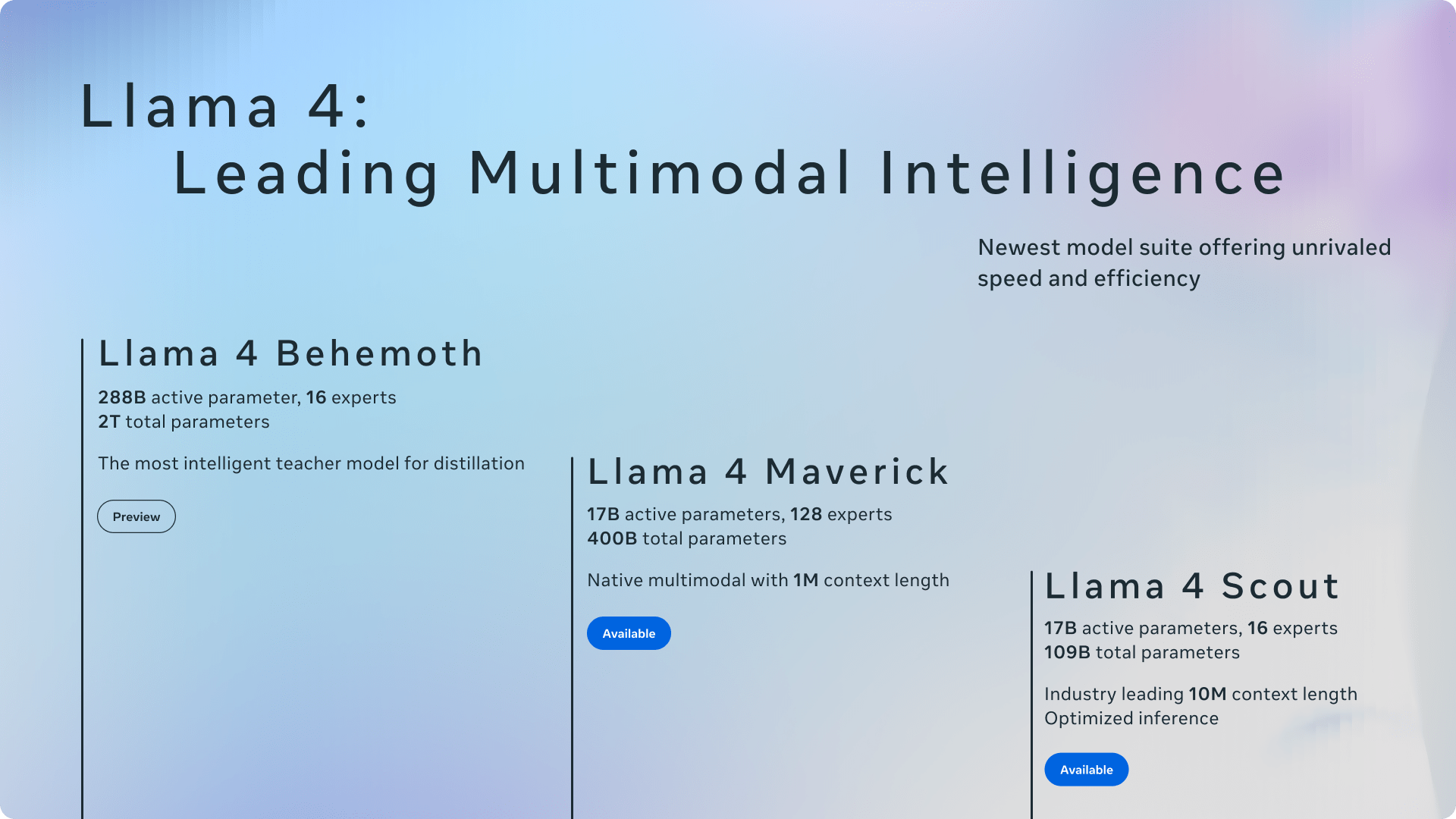

Notable features include simple API key creation and interactive playgrounds for experimentation. These make it easy to explore different Llama models, including the recently announced Llama 4 Scout and Llama 4 Maverick. The platform ships lightweight SDKs for Python and TypeScript and is compatible with the OpenAI SDK, so existing applications can be integrated with minimal changes and less implementation time.
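Because the API is described as compatible with the OpenAI SDK, a request can be sketched in the shape an OpenAI-style chat completion call usually takes. The sketch below only builds the request and does not send it. The base URL, endpoint path, and model identifier are placeholders assumed for illustration, not values confirmed by the announcement.

```python
import json
import urllib.request

# Hypothetical values for illustration only. The real base URL and model
# identifiers come from the Llama API documentation and your account.
BASE_URL = "https://llama-api.example/v1"
MODEL = "llama-4-scout"


def build_chat_request(api_key: str, prompt: str, model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_chat_request("YOUR_API_KEY", "Summarize LlamaCon in one sentence.")
    print(request.full_url)
    print(request.data.decode("utf-8"))
```

In an existing OpenAI SDK project, the equivalent change would typically be limited to the client's base URL, API key, and model name, assuming the compatibility holds for the endpoints you use.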

Customization and Efficiency: Fine-tuning and Evaluation

The Llama API goes beyond basic access with tools for fine-tuning and evaluation. Developers can tune custom versions of the Llama 3.3 8B model. Customization can reduce costs while improving performance and accuracy, all of which matter for any project. The platform lets you generate synthetic data, train a model on it, and then assess the quality of the result with a dedicated evaluation suite.

This matters because it replaces guesswork with evidence. Organizations can verify that a model performs well and confirm that it meets their specific requirements. On data protection: user prompts and model responses are kept confidential and are not used for further model training. Custom models remain yours, and you can host them on the infrastructure you prefer without vendor lock-in.
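To make the evidence-based idea concrete, here is a minimal sketch of comparing a base model and a tuned model on the same labeled examples. It is not the Llama API's evaluation suite. The metric (case-insensitive exact match), the tiny evaluation set, and the two stand-in model functions are all made up for illustration; in practice each function would wrap a real model call.

```python
from typing import Callable, Iterable, Tuple

# A "model" here is any callable that maps a prompt to a text answer.
Model = Callable[[str], str]


def exact_match_accuracy(model: Model, examples: Iterable[Tuple[str, str]]) -> float:
    """Return the fraction of examples the model answers exactly right."""
    examples = list(examples)
    if not examples:
        raise ValueError("evaluation set is empty")
    correct = sum(
        1
        for prompt, expected in examples
        if model(prompt).strip().lower() == expected.strip().lower()
    )
    return correct / len(examples)


# Tiny illustrative evaluation set (invented for this sketch).
EVAL_SET = [
    ("Capital of France?", "Paris"),
    ("2 + 2 = ?", "4"),
    ("Opposite of hot?", "cold"),
    ("Color of the sky on a clear day?", "blue"),
]


def base_model(prompt: str) -> str:
    # Stand-in for the untuned model: answers two of the four prompts.
    answers = {"Capital of France?": "Paris", "2 + 2 = ?": "4"}
    return answers.get(prompt, "unknown")


def tuned_model(prompt: str) -> str:
    # Stand-in for the fine-tuned model: answers three of the four prompts.
    answers = {
        "Capital of France?": "paris",
        "2 + 2 = ?": "4",
        "Opposite of hot?": "Cold",
    }
    return answers.get(prompt, "unknown")


if __name__ == "__main__":
    print("base :", exact_match_accuracy(base_model, EVAL_SET))
    print("tuned:", exact_match_accuracy(tuned_model, EVAL_SET))
```

Running both models against one fixed evaluation set is the design choice that matters here: the scores are only comparable when the examples and the metric stay the same.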

Faster Inference with Cerebras and Groq

Speed is a core concern in AI today. We are excited about the collaboration with Cerebras and Groq, which gives Llama API users faster inference. Early access to Llama 4 models served on these companies' hardware is currently available. This makes it possible to experiment and prototype quickly before a broader rollout.

The approach emphasizes more choice and more configuration options. Selecting a Cerebras or Groq model name in the API gives immediate access. Usage across all of these options is tracked in a single place, which keeps operations simple. The partnership reinforces the commitment to an open ecosystem and expands the options for building on Llama. At RayMish, we value tools that empower developers, and we see this as a significant resource.
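As a rough sketch of what selecting a provider by model name could look like in application code, the example below maps a provider label to a model identifier and builds a request payload. The identifiers in `PROVIDER_MODELS` are hypothetical placeholders; the actual Cerebras and Groq model names would come from the Llama API documentation.

```python
# Hypothetical model identifiers, used only to illustrate the idea of
# switching providers by changing the model name in the request.
PROVIDER_MODELS = {
    "default": "llama-4-scout",
    "cerebras": "cerebras-llama-4-scout",
    "groq": "groq-llama-4-scout",
}


def model_for(provider: str) -> str:
    """Return the model identifier configured for a provider label."""
    key = provider.strip().lower()
    if key not in PROVIDER_MODELS:
        known = ", ".join(sorted(PROVIDER_MODELS))
        raise ValueError(f"unknown provider {provider!r}; expected one of: {known}")
    return PROVIDER_MODELS[key]


def chat_payload(provider: str, prompt: str) -> dict:
    """Build a chat request body; only the model name changes per provider."""
    return {
        "model": model_for(provider),
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    for name in ("default", "cerebras", "groq"):
        print(name, "->", chat_payload(name, "Hello")["model"])
```

Keeping the mapping in one place means the rest of the application does not need to change when a different provider is tried.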

Llama Stack Integrations and Protection Tools

Developers have asked for simpler ways to deploy applications across different infrastructure providers. Current efforts expand the existing partnerships: a recently completed Llama Stack integration with NVIDIA NeMo microservices, plus close work with vendors including IBM, Red Hat, and Dell on integrations that will be announced later. Through these partnerships, Llama Stack is being positioned as the standard for enterprises that want dependable, production-ready AI deployments.

New Llama protection tools support the open-source community: Llama Guard 4, LlamaFirewall, and Llama Prompt Guard 2. Further announcements help organizations evaluate how well AI systems perform in security contexts, including updates in CyberSecEval 4 and the Llama Defenders Program for select partners. As AI models become more capable, this is a meaningful step toward more robust software systems.

Llama Impact Grants and the Future of Open Source AI

The Llama Impact Grants deserve particular attention. The grants support companies, startups, and universities that use Llama to drive meaningful change. Awards totaling more than $1.5 million underline the transformative potential of open-source innovation.

As advocates of open AI, we see the Llama ecosystem as a game changer. With Llama, developers and enterprises are free to build what they want, without proprietary restrictions or lock-in contracts. That freedom, together with portability and accessibility, makes Llama a leading choice for getting the most out of AI. The future is open, and Llama is leading the way.

FAQs: Your Questions Answered

What is the Llama API?

The Llama API is a developer platform for building applications on Llama models. It provides easy access to Llama models, tools for fine-tuning and evaluation, and fast inference options.

How can I access the Llama API?

The Llama API is in limited preview. Prospective users can request early access through the application form in the announcement.

What are the benefits of using the Llama API?

Benefits include quick API key creation, interactive playgrounds, lightweight SDKs, fine-tuning and evaluation tools, and ownership of and control over your models and data.

Is my data secure when using the Llama API?

Yes. Data privacy and security are top priorities. Your prompts and the model's responses are kept secure and are not used for further model training.

Conclusion

The Llama API is a meaningful step forward for open-source AI development. It offers an accessible platform, capable tools, and a firm commitment to developer control and data privacy. At RayMish, we see its transformative potential and look forward to seeing it benefit our clients and developers worldwide. It marks another notable step in the evolution of AI, and we are excited to be part of it.